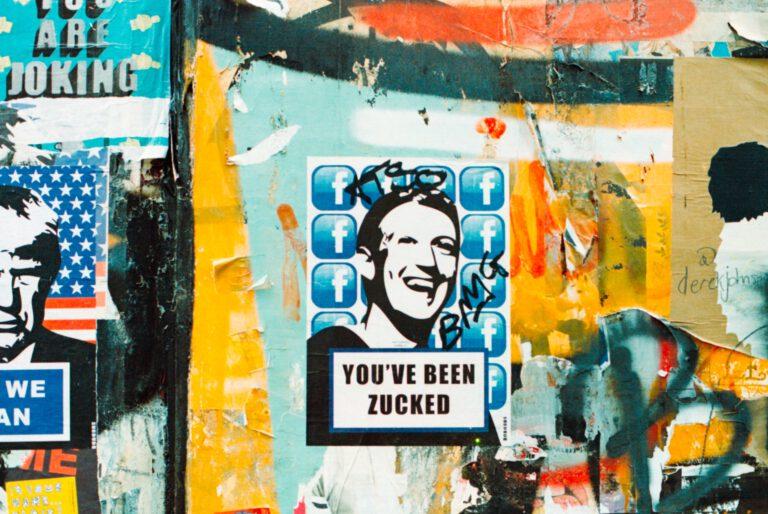

The Value of Your Safety: Facebook’s Neglect of Public Safety for Profit

After numerous scandals and appearances before the US Congress and other parliaments, Facebook has managed to involve itself in yet another controversy. Frances Haugen, a former employee, has released documents to the worldwide press that point out several issues with Facebook’s processes. According to Haugen, one of these issues is Facebook’s continual choice to prioritize profit over safety. This choice, in turn, leads to many other problems: algorithms that favor engagement and therefore more hateful posts, special treatment of high-profile users, a host of issues in violent countries, and regions with fewer security measures and moderators due to a lack of language support.

Two major things cause most of Facebook’s issues: the decisions made by Facebook’s higher-ups, and the algorithms Facebook uses, along with the specific instructions it has given to its machine learning systems. However, although the release of these documents has made these issues prominent, they are not necessarily restricted to Facebook.

Facebook’s management makes choices that put the company and its profits ahead of public safety. This can be seen, for example, in Facebook’s role in and actions surrounding the 2020 US elections. Documents leaked by Haugen show that, over the course of several years, Facebook employees had identified through internal research multiple ways to diminish the spread of political polarization, conspiracy theories, and incitements to violence. However, executives at Facebook decided not to implement the suggested steps. Moreover, once the election had concluded, Facebook’s management rolled back many of the precautions that had been taken in anticipation of it, a decision that would later seem premature in light of the insurrection on January 6th, 2021. These rollbacks included the almost complete disbandment of the company’s Civic Integrity team, a change that, according to several former employees, was enacted partly because Facebook’s management did not like the criticism this team had raised. According to some of the documents, one of the executives’ main concerns was that implementing certain measures might harm engagement on the platform. In other words, executives worried that these measures could negatively affect the platform’s user base and, thus, the company’s finances.

Engagement also highlights the issues caused by Facebook’s algorithms. Algorithms can be quite useful if used in the right way. After all, an algorithm might suggest that one really funny cat video that you wouldn’t have been able to find on your own, and there isn’t anything inherently wrong with such a harmless example. However, it is important to remember that algorithms care almost solely about the goal given to them by their programmers. In 2018, Facebook made a major change to its algorithms because executives were worried about losing some of the platform’s members. From then on, the algorithm’s goal would be to promote engagement or, as Facebook publicly put it, more “meaningful social interactions.” The issue with this approach, as Facebook’s own researchers also came to realize, is that the algorithm promoted more divisive and angering content after this change. It picked up on the fact that people engaged with this content more and therefore gave it a massive boost. As you can probably understand, this doesn’t help if your goal is to prevent people from flocking to radical and extremist groups on Facebook.

The most interesting part of these leaked Facebook documents, though, is what they show about social media more broadly. Facebook’s specific case reveals major flaws in its leadership, which obviously only makes decisions for Facebook and its sister platforms, but the issues made apparent by the Facebook files are not restricted to Facebook. It is far easier for social media companies to prioritize profit over safety on their platforms. Safety requires costly monitoring and the employment of countless moderators and fact-checkers. That is a sizable risk: if things don’t work out, the investment is unrecoverable and could cost a company a serious amount of money. Profit, on the other hand, requires less effort and can be achieved with a comparatively simple change to the algorithm. The main issue here seems to be that social media companies have too much discretion over what to do with their platforms. Most of the leaked Facebook documents are actually screenshots taken from the company’s internal message board, on which employees posted the results of their own research and held discussions with each other. That social media companies mostly check their own actions and the consequences of those actions is not inherently wrong, but the fact that these same companies can decide what to do with private research into their own workings is cause for concern. This ability is ultimately what made the issues highlighted by the Facebook files possible, and it could cause further problems in the future if more social media companies also crave more income.

The documents leaked by Frances Haugen show several fundamental issues within Facebook. Although Facebook generally denies many of the claims made on the basis of the leaked documents, the documents reveal a worrying culture within the company. Executives’ choices to ignore or not act upon issues that currently harm public safety cause direct safety concerns and could lead to perhaps larger cultural and political issues in the future. While Facebook’s situation is certainly worrying, the underlying cause of this situation is perhaps even more so. When social media companies are able to go largely unchecked and unregulated, they may choose to maximize profits at the cost of public safety. Although it is still somewhat uncertain exactly how, this problem must be tackled within the coming years if a greater polarization of the general public is to be avoided. What is certain, though, is that a name change isn’t going to fix these issues, “Meta.”