AI: Where Are We Going?

Artificial intelligence is a concept most people have heard of by now. For those of you who do not know what artificial intelligence is, I have asked ChatGPT to answer that question:

> AI stands for Artificial Intelligence, which refers to the simulation of human intelligence in machines that are programmed to mimic human cognitive functions such as learning, problem-solving, decision-making, and perception. AI algorithms use vast amounts of data and computational power to identify patterns and insights that can be used to make predictions, optimize processes, and automate tasks. AI can be applied in a wide range of fields, including healthcare, finance, education, transportation, and entertainment, among others. AI technology is rapidly evolving and has the potential to transform many aspects of society in the coming years.

A relatively simple concept, right? "Mimic human cognitive functions," as ChatGPT eloquently puts it. Well, it is not quite so simple. The human brain is one of the most complex structures known to science, so mimicking it is far from easy. For this reason, AI is often divided into stages that describe how closely a system approaches the capabilities of the human brain, or how far it surpasses them.

The first stage of AI is ANI (Artificial Narrow Intelligence). This stage is already widespread in today's world. ANI is designed to perform a single task. It can be far more capable than a human at that specific task, but it cannot perform any other. Take, for example, an ANI chess engine. It already plays better than the best human players, but it cannot play a game of checkers. Chess engines have become so strong that they now compete in their own tournaments to see which program is best.

Another example is the ANI chatbot. Chatbots such as ChatGPT are trained on enormous amounts of human-written text, and from that text they learn to predict what a plausible human answer to a question, or a typical essay, would look like. Current chatbots are far from perfect, but they have great potential to become much more accurate. They also illustrate both the promise and the dangers of AI: the same bots that keep lonely people company can also write essays that students pass off as their own.

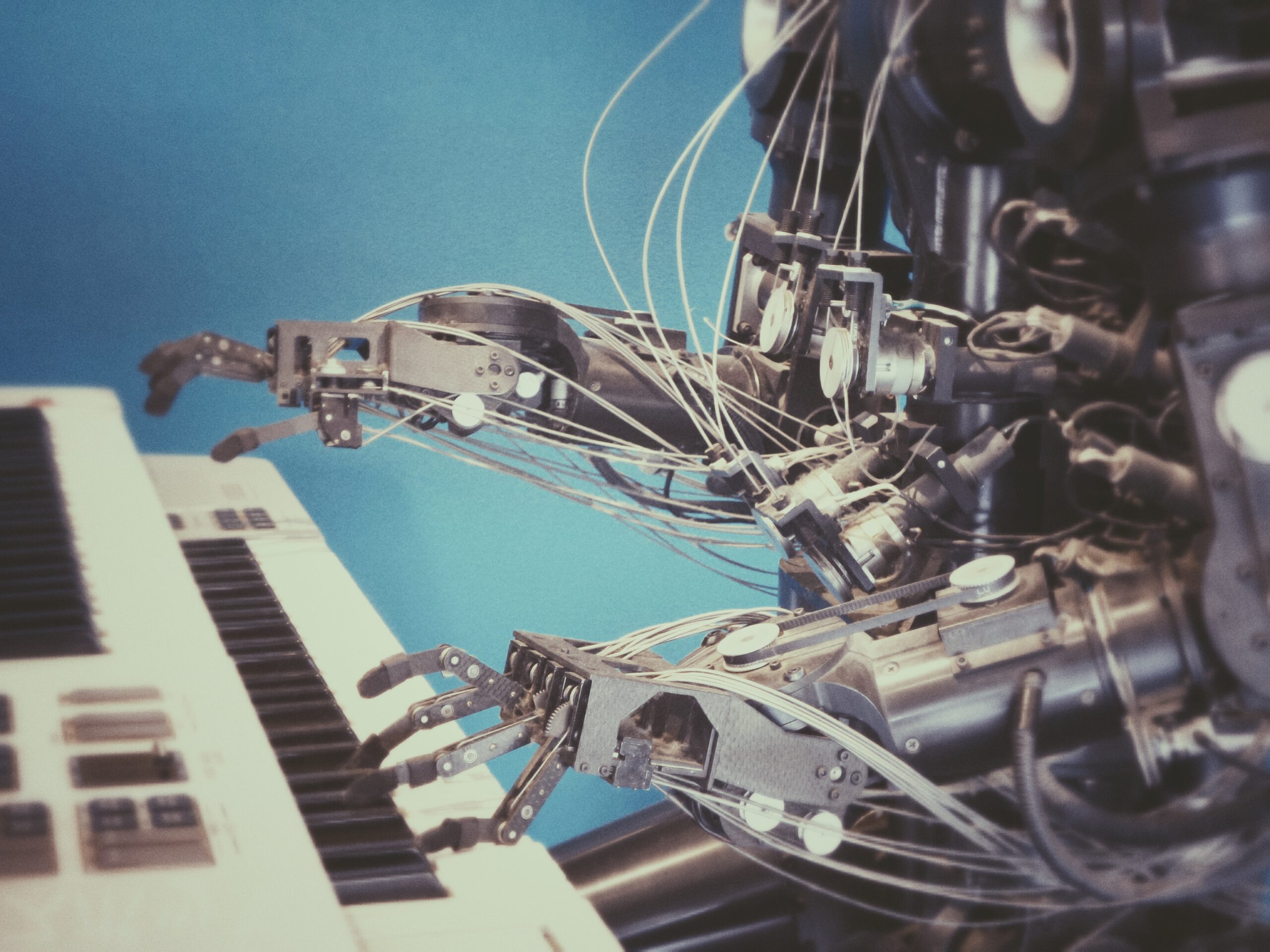

The second stage is AGI (Artificial General Intelligence). AGI can be thought of as a system that understands the world around us the way humans do. It would learn not only from text, as many of today's ANI chatbots do, but from all kinds of input, and with that input it could perform any task a human brain could perform. A single system could throw a ball precisely into a basket, win at chess, recite literature, plan a camping trip, and hold a natural-sounding conversation with a human. AGI does not exist yet, but many projects around the world are racing to create the first one. Companies have already taken the first steps: DeepMind's Gato model, for example, can perform 604 different tasks. This generality came at a cost, as Gato performs many of those tasks less well than specialized, single-purpose models. Still, AGI seems to be drawing ever closer.

The last stage is also the scariest-sounding: ASI (Artificial Super Intelligence). ASI is essentially AGI that far surpasses the human brain in every respect. Stephen Hawking warned that powerful AI could be either the best or the worst thing ever to happen to humanity. The worst case is that ASI could, in theory, take over the world, as in the plots of many science-fiction stories. Whether humans will ever build a machine that surpasses us in every respect, and whether such a machine would then turn its power against us, is still up for debate. Either way, ASI would have enormous potential to change the world.

Many regard AI as the driving force of a fourth industrial revolution, following those brought about by the steam engine, electricity, and the computer. Limited forms of AI already exist, and they are used for both good and bad. Future systems are likely to be even more capable, possibly operating entirely independently, making their own decisions and maintaining themselves. It seems that the greater their capabilities, the greater both the benefits and the risks. It is therefore vital that scientists ask not only whether they could build such systems, but also whether they should. Lawmakers are already struggling to keep up with these developments, and they face big decisions. If they fail to act, we may end up in a world full of deepfake videos, social manipulation, and discriminatory bias in the job market, or worse, a world in which AI has taken over.
